Research themes

Active-sensing collectives in the field: a high-resolution, multi-disciplinary approach

Echolocating bats navigate in complete darkness using sound alone. Much of our understanding of echolocation comes from studies of individual bats in the field and in the lab. Even for a single bat, echolocation is no easy task: one call generates multiple overlapping echoes, and the bat must decide how to filter and process this stream of incoming echoes.

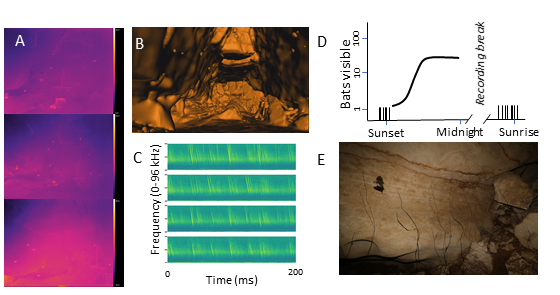

Left: The multi-sensor Ushichka dataset, with a multi-channel microphone array, thermal cameras and LiDAR scans of the cave the bats flew in. Right: Panels A-C show snippets of the recorded data; panels D and E show bat activity.

Yet echolocating bats are capable of even more. Bats are among the most gregarious animals in the world, and can fly in dense swarms in complete darkness, relying only on echolocation. Studying these dense swarms is not trivial, however, and here we use the latest sensors, techniques and algorithms to 1) reconstruct individual sensory inputs and 2) rationalise individual sensorimotor decisions at high spatio-temporal resolution.

To achieve the goals of high-resolution sensory reconstruction and understanding individual decision-making, we engage in an inter-disciplinary process that consists of 1) building novel field-friendly multi-sensor rigs to capture a holistic picture of active-sensing swarms, 2) modelling to reconstruct individual sensory inputs and explain motor outputs, and 3) developing new computational methods to localise sources in audio with overlapping sounds, and testing models of sound radiation.

Schematic of in-situ sensory reconstruction of a focal bat (extreme right) with a few neighbours. Knowing the locations of all bats in the cave, we can calculate when the calls of the focal bat will reach other bats, when the echoes from the emitted calls will arrive, and how loud they will be. These calculations can be iterated over all individuals in a swarm, allowing us to recreate the holistic, complex 'auditory scene' that active-sensing agents in collectives experience. Image modified from originals by Stefan Greif.
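The calculation described in the schematic can be sketched in a few lines. This is a minimal illustration, not the lab's reconstruction pipeline. It assumes straight-line propagation at a fixed speed of sound (343 m/s), spherical spreading loss only (no atmospheric absorption, no call directionality), first-order echoes only, and a single hypothetical target strength for every reflecting bat. All function names are made up for this example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 degrees C


def spreading_loss_db(r, r_ref=1.0):
    """Spherical spreading loss (dB) at distance r, relative to r_ref metres."""
    return 20.0 * math.log10(r / r_ref)


def direct_arrival(emitter, receiver, source_level_db, c=SPEED_OF_SOUND):
    """Delay (s) and received level (dB) of a call travelling straight to a receiver."""
    r = math.dist(emitter, receiver)
    return r / c, source_level_db - spreading_loss_db(r)


def echo_arrival(emitter, reflector, receiver, source_level_db,
                 target_strength_db, c=SPEED_OF_SOUND):
    """Delay (s) and level (dB) of an echo along emitter -> reflector -> receiver."""
    r1 = math.dist(emitter, reflector)
    r2 = math.dist(reflector, receiver)
    level = (source_level_db - spreading_loss_db(r1)
             - spreading_loss_db(r2) + target_strength_db)
    return (r1 + r2) / c, level


def arrivals_from_call(positions, emitter, source_level_db, target_strength_db):
    """All direct calls and first-order echoes produced by one call, sorted by delay.

    Returns tuples of (kind, receiver_index, reflector_index, delay_s, level_db).
    """
    arrivals = []
    for rcv, rcv_pos in enumerate(positions):
        if rcv != emitter:
            delay, level = direct_arrival(positions[emitter], rcv_pos, source_level_db)
            arrivals.append(("call", rcv, None, delay, level))
        for refl, refl_pos in enumerate(positions):
            # The reflecting bat must differ from both the emitter and the receiver.
            if refl not in (emitter, rcv):
                delay, level = echo_arrival(positions[emitter], refl_pos, rcv_pos,
                                            source_level_db, target_strength_db)
                arrivals.append(("echo", rcv, refl, delay, level))
    return sorted(arrivals, key=lambda a: a[3])
```

Looping `arrivals_from_call` over every bat index gives a per-call scene for the whole group, which is the iteration the caption describes. For two bats 3.43 m apart, the neighbour hears the call after about 10 ms and the caller hears the echo off its neighbour after about 20 ms.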

The swarm robotics of active-sensing agents

Echolocation is a pretty neat sensory modality. As a 'single-sensor' modality, echolocation is also a data- and computationally efficient way to navigate your surroundings. This makes echolocation/SONAR a nice candidate for robotics, and specifically for swarm robotics.
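To illustrate why a single emitter-receiver pair already carries useful information: the delay between emitting a call and hearing its echo gives the distance to the reflecting object, because the sound travels there and back. The sketch below is minimal and assumes a constant speed of sound of 343 m/s; `echo_range` is a hypothetical helper, not part of any lab software.

```python
SPEED_OF_SOUND = 343.0  # m/s; assumed constant


def echo_range(echo_delay_s, c=SPEED_OF_SOUND):
    """Distance (m) to a reflector, given the call-to-echo delay in seconds.

    The sound covers the distance twice (out and back), hence the division by 2.
    """
    if echo_delay_s < 0:
        raise ValueError("echo delay must be non-negative")
    return c * echo_delay_s / 2.0
```

For example, `echo_range(0.010)` places an echo arriving 10 ms after the call at roughly 1.7 m.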

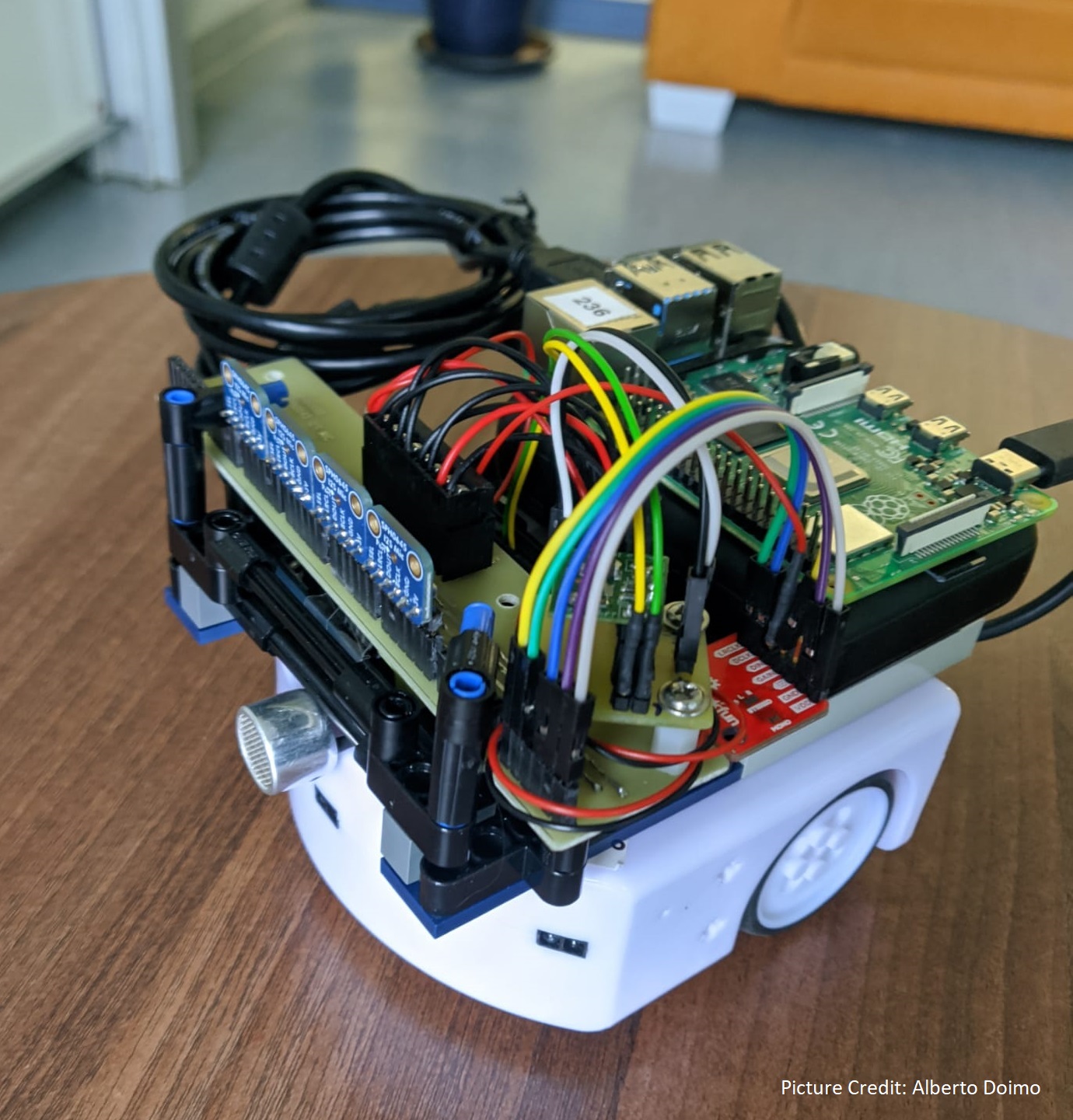

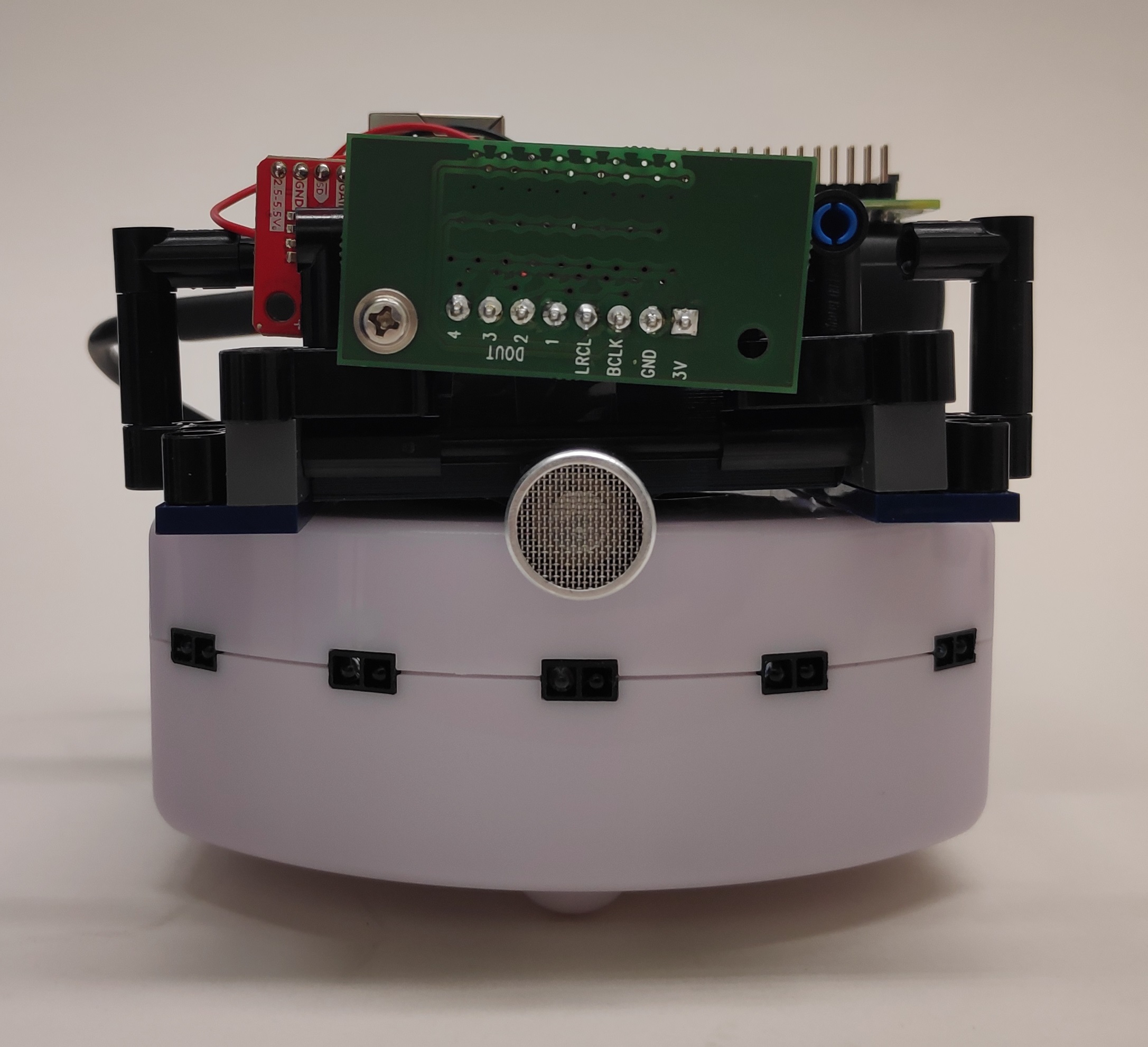

We use swarm robotics to understand active-sensing collectives from two perspectives: 1) to explain and rationalise behaviours seen in biological systems, and 2) to experiment with and discover new strategies for navigating complex echolocation scenes. With our collaborators Andreagiovanni Reina and Heiko Hamann, we have been developing the 'Ro-BAT' active-sensing swarm robotics platform.

Left: An early version of the V0 Ro-BAT with a widely separated microphone array. Right: A later version of the V0 Ro-BAT with a tight microphone array for better direction-of-arrival localisation.
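The direction-of-arrival idea behind a microphone array can be illustrated with the simplest case of two microphones. The time difference of arrival (TDOA) between them, found here by cross-correlation, constrains the source bearing via sin(theta) = c * tau / d, where d is the microphone spacing. This is a minimal far-field sketch, not the Ro-BAT's actual processing; the sampling rate, spacing and function names are assumptions for illustration. Because the largest physically possible delay is d / c, the search only needs to cover that range of lags.

```python
import math


def best_lag(a, b, max_lag):
    """Lag (samples) in [-max_lag, max_lag] maximising sum(a[n] * b[n + lag])."""
    best, best_val = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        val = sum(a[n] * b[n + lag] for n in range(len(a)) if 0 <= n + lag < len(b))
        if val > best_val:
            best, best_val = lag, val
    return best


def doa_two_mics(sig_a, sig_b, fs, mic_spacing, c=343.0):
    """Far-field bearing (degrees) from broadside; positive if sound reaches mic A first."""
    # The largest physically possible delay is mic_spacing / c.
    max_lag = math.ceil(mic_spacing / c * fs)
    tau = best_lag(sig_a, sig_b, max_lag) / fs  # > 0 when mic B hears the sound later
    # Clip: the rounded-up lag limit can slightly exceed the physical maximum.
    sin_theta = max(-1.0, min(1.0, c * tau / mic_spacing))
    return math.degrees(math.asin(sin_theta))
```

With a hypothetical 5 cm spacing sampled at 192 kHz, a 10-sample lag corresponds to a bearing of roughly 21 degrees.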

Methods development and collaboration

The data we collect have their own quirks, meaning typical analysis tools may not fit, or may not exist at all. Many available algorithms and methods are conceived with human or industrial use-cases in mind, and assume inputs with matching properties (human speech, clean geometric shapes, etc.). We, of course, often end up studying the very opposite of these settings by going out at night (next to trees, ponds, or in caves) and recording data opportunistically. We tackle the challenge of our unconventional datasets either by building the tools ourselves, or by collaborating with others to develop and test new methods on our data.

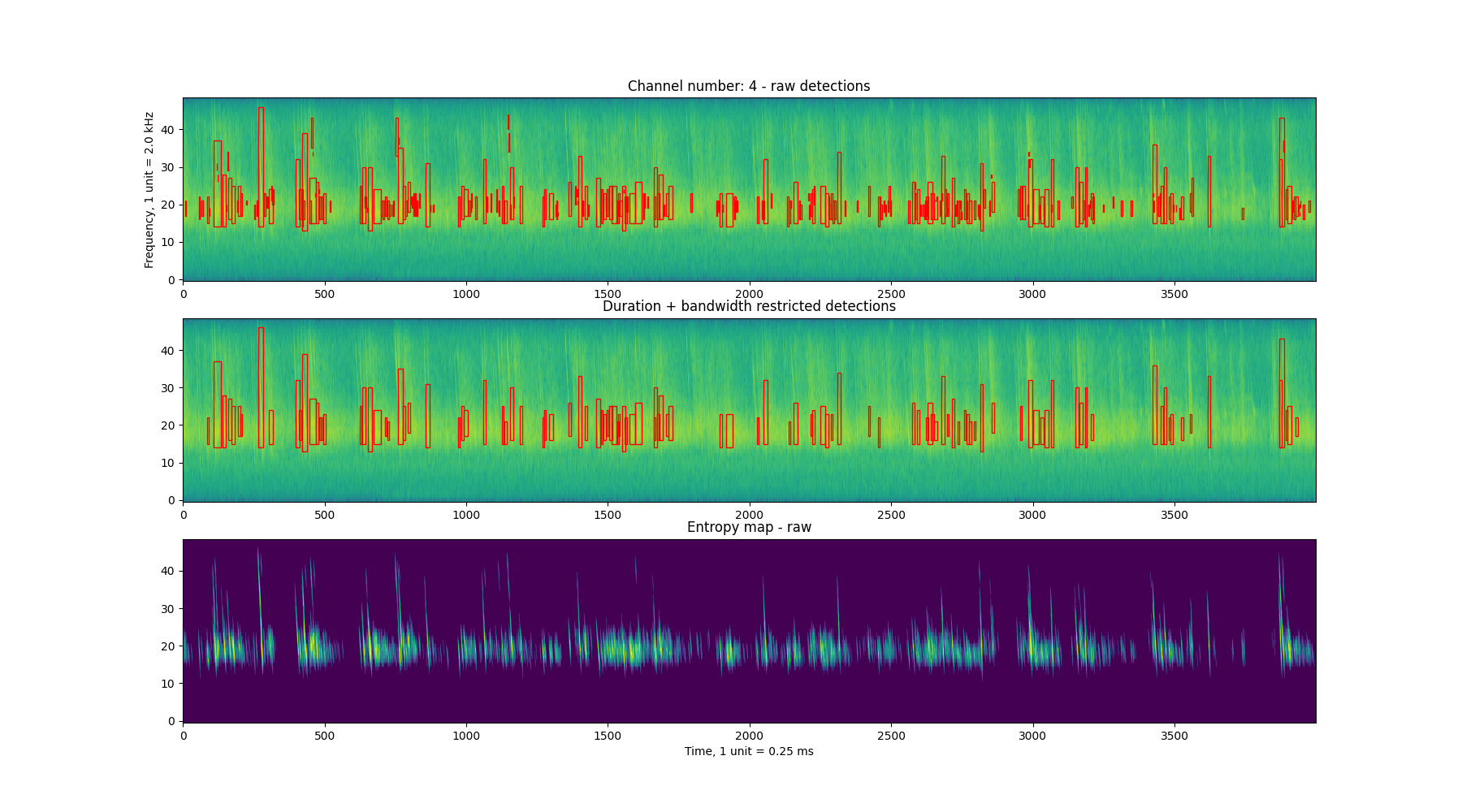

Algorithms for multi-channel, multi-source localisation

When multiple echolocators come together, a problem arises not only for the echolocators themselves, but also for those studying them (us!). Extracting information from audio with overlapping sounds is an open problem that is still being actively researched. Multi-bat audio is especially challenging, because the calls are directional and the recordings are reverberant. In collaboration with Kalle Åström (Uni. Lund) and team, we are looking into algorithms that localise sources in multi-channel audio with overlapping sounds, while also testing how well microphone array self-localisation works in the field.
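As a point of reference for what localising a source in multi-channel audio involves, here is a deliberately simple sketch. Given the microphone positions and the measured time differences of arrival (TDOAs) of one call relative to a reference microphone, it picks the candidate position whose predicted TDOAs match best in a least-squares sense. This brute-force grid search is a textbook baseline, not the algorithms being developed with Kalle Åström's team, and it sidesteps the hard parts named above: it assumes the TDOAs are already measured and correctly attributed to a single call, known microphone positions, and a fixed speed of sound of 343 m/s. The array geometry and grid are hypothetical.

```python
import math
from itertools import product

SPEED_OF_SOUND = 343.0  # m/s; assumed constant


def predicted_tdoas(source, mics, c=SPEED_OF_SOUND):
    """TDOAs (s) of each microphone relative to microphone 0."""
    times = [math.dist(source, m) / c for m in mics]
    return [t - times[0] for t in times[1:]]


def localise_grid(measured, mics, candidates, c=SPEED_OF_SOUND):
    """Candidate point whose predicted TDOAs best match the measured ones."""
    def cost(point):
        return sum((p - m) ** 2
                   for p, m in zip(predicted_tdoas(point, mics, c), measured))
    return min(candidates, key=cost)


# Hypothetical five-microphone array (metres) and a 0.5 m search grid.
mics = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
grid = [tuple(0.5 * i for i in idx) for idx in product(range(-10, 11), repeat=3)]
```

In practice a finer grid, or an iterative refinement around the best cell, would be needed; the number of candidates grows with the cube of the grid resolution.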

Left: Example of acoustic localisation points from reverberant audio. Right: A simple call detection workflow to perform localisation.
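A call detection step can take many forms; one common starting point is to flag segments where the short-time energy rises above a threshold set relative to the loudest part of the recording. The sketch below assumes that approach, with a hypothetical window length and a -20 dB threshold. It is not the workflow shown in the figure, and real recordings would normally be band-pass filtered first to suppress low-frequency noise.

```python
def detect_calls(signal, fs, window=64, threshold_db=-20.0):
    """Return (start_s, end_s) segments whose mean energy exceeds threshold_db re. the peak."""
    # Cumulative sum of squared samples gives each window's energy in O(1).
    cumsum = [0.0]
    for x in signal:
        cumsum.append(cumsum[-1] + x * x)
    n_frames = len(signal) - window + 1
    if n_frames <= 0:
        return []
    energy = [(cumsum[i + window] - cumsum[i]) / window for i in range(n_frames)]
    peak = max(energy)
    if peak == 0.0:
        return []
    threshold = peak * 10 ** (threshold_db / 10)  # dB re. peak energy -> linear ratio
    segments, start = [], None
    for i, e in enumerate(energy):
        if e >= threshold and start is None:
            start = i  # first window that overlaps the call
        elif e < threshold and start is not None:
            # Window i - 1 was the last one above threshold; it ends at sample i + window - 2.
            segments.append((start / fs, (i + window - 1) / fs))
            start = None
    if start is not None:
        segments.append((start / fs, len(signal) / fs))
    return segments
```

Because each window extends over `window` samples, the detected segments are padded by up to one window length on either side of the actual call.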

Multi-sensor alignment and workflows

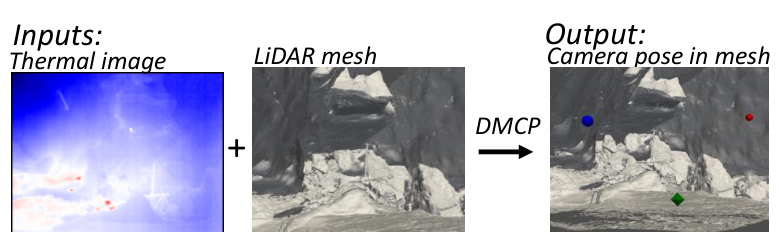

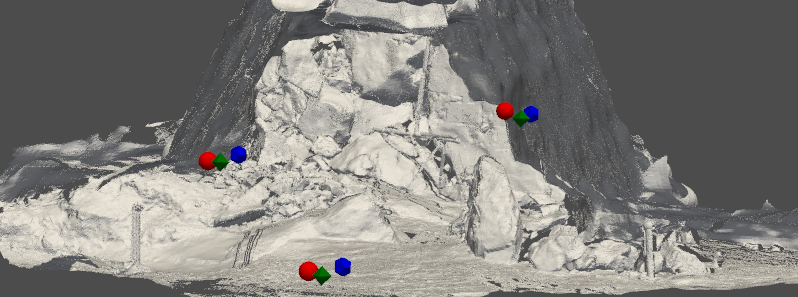

An important focus of the lab is to exploit new sensor types to build a holistic understanding of how active-sensing agents manage in groups. However, algorithms and sensors designed for 'common' human/industrial contexts do not always work in ours. In collaboration with Bastian Goldlücke (Uni. Konstanz), we have been developing a workflow to align thermal camera scenes with LiDAR scans of caves, which lack the sharp edges and strong contrasts found in typical datasets.

Top: The stages of the DMCP algorithm, from thermal and LiDAR scenes to user inputs. Bottom: The final inferred positions of the three thermal cameras in the cave.
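One generic way to judge how well a thermal camera is aligned to a LiDAR scan is the reprojection error. This method projects 3D points from the scan into the camera image using an estimated pose, then compares them with the matching image points, for example user-selected correspondences. The sketch below assumes an ideal pinhole camera with no lens distortion, and a rotation matrix and translation vector mapping world to camera coordinates. It is a standard check, not the DMCP algorithm itself, and all names are hypothetical.

```python
import math


def project(point, rotation, translation, focal_px, principal_point):
    """Pinhole projection of a 3D world point to pixel coordinates (u, v)."""
    # Camera coordinates: X_c = R @ X_w + t
    xc = [sum(rotation[r][k] * point[k] for k in range(3)) + translation[r]
          for r in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    u = focal_px * xc[0] / xc[2] + principal_point[0]
    v = focal_px * xc[1] / xc[2] + principal_point[1]
    return u, v


def rms_reprojection_error(points_3d, points_2d, rotation, translation,
                           focal_px, principal_point):
    """Root-mean-square pixel distance between projected and observed points."""
    if not points_3d:
        raise ValueError("need at least one correspondence")
    squared = []
    for p3, (u_obs, v_obs) in zip(points_3d, points_2d):
        u, v = project(p3, rotation, translation, focal_px, principal_point)
        squared.append((u - u_obs) ** 2 + (v - v_obs) ** 2)
    return math.sqrt(sum(squared) / len(squared))
```

A pose-estimation step would search for the rotation and translation that minimise this error over all correspondences.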

Build, and share: scientific software development

TLDR: Do it once, and try to package it well.

It is a pity that so many (computational) methods are lost over time because they weren't originally written with other users in mind. We try to combat this culture of 'forgetting' by sharing our implementations with the scientific community using time-tested approaches from the software engineering industry (e.g. unit testing, convention-based docs, package-based sharing). For more, see the software & code page.